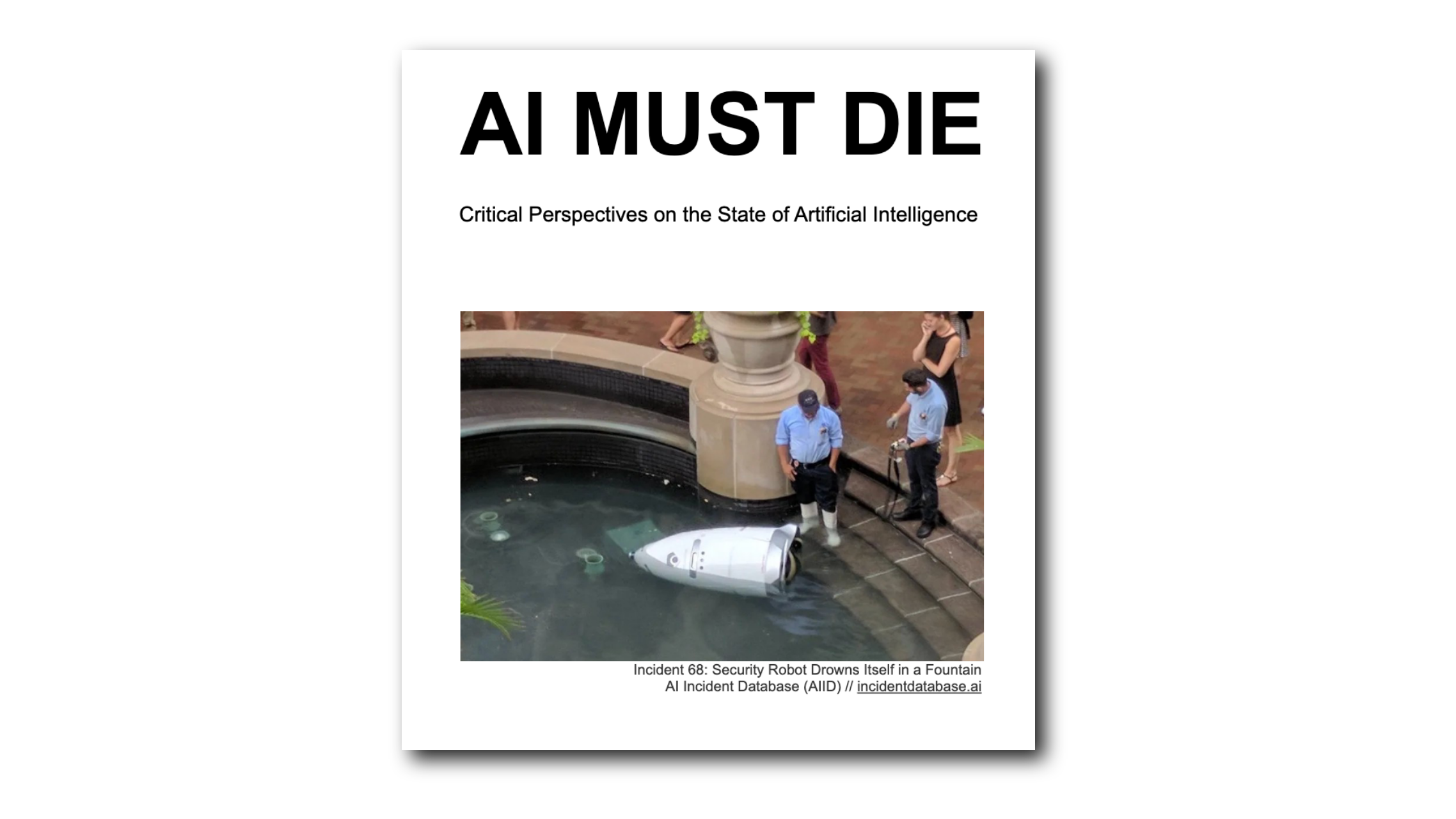

**AI Must Die!**

*Critical Perspectives on the State of Artificial Intelligence*

*AI MUST DIE* is a short zine by *Myke Walton* and *Cam Smith* that presents critical perspectives on how AI is talked about, governed, and owned in 2025.

> *AI is fucking everywhere: at work, at school, in our homes, in our phones, on our streets, in our governments, and at war. We are living through an era of extreme AI hype. In this climate, some people have gotten rich off of the stolen data and stolen labor that fuels these technologies. Others have been surveilled, oppressed, exploited, and killed. This text presents a short, practical guide to the technologies currently called “AI,” the ideologies and actors pouring gasoline on the AI dumpsterfire, and what we can do about it.*

> ...

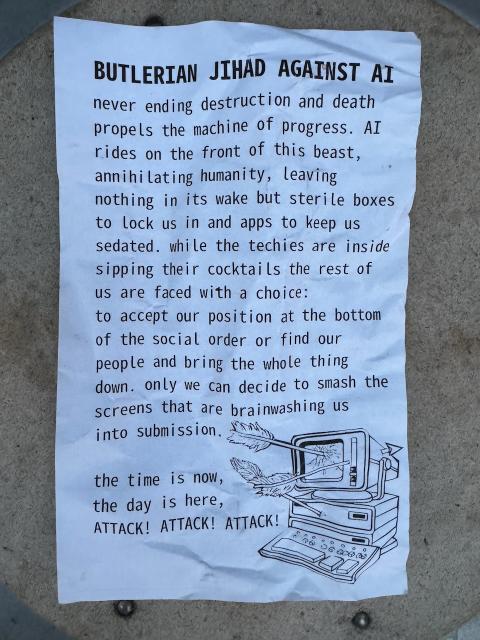

> *How long will this go on? When will the hype cycle end? Is another AI winter coming? Faced with an inhumane tech industry and complicit governments it may ultimately fall to the people to invent new ways to evade, refuse, resist, sabotage or otherwise destroy AI.*

> *Hammers up! AI Must Die!*

> *Seize the means of computation*

Read the full zine here: The Zine | AI MUST DIE!