Apropos of nothing in particular,

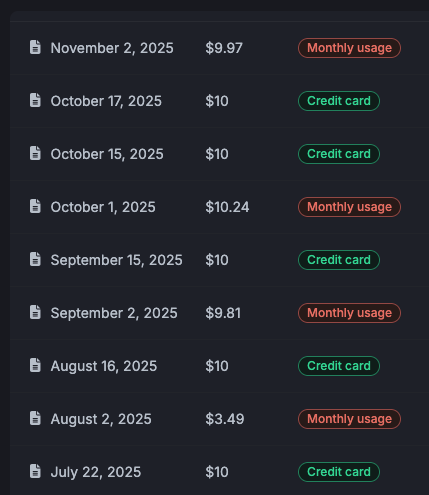

A few months ago, someone on the fediverse (I forget who) mentioned that they were using bunny.net as their CDN. About 3 months ago I started moving all my important sites to it, and I'm pretty happy. It's super cheap and has been plenty reliable.

Every time I've put in a support request (5 or 6 in the last 3 months), a human who knew what they were doing answered me within an hour.

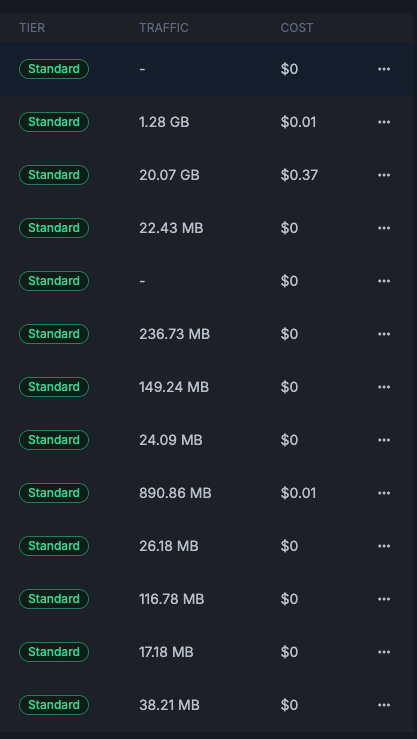

On my one large site (1K active users), it's doing a good job with bots. I pay $10/month for their "shield" service on just that one domain, and it works. The other domains cost pennies.

And yes, that's an affiliate link. If you click it I get brownie points or something. I've had 2 clicks ever. Click it. I dare you.

#LLM #aisecurity
