Something about the growth of LLM use bothered me: why would people ever ask one anything at all, when it only gives an answer that sounds like an answer might? Until this week, I was missing something glaringly obvious.

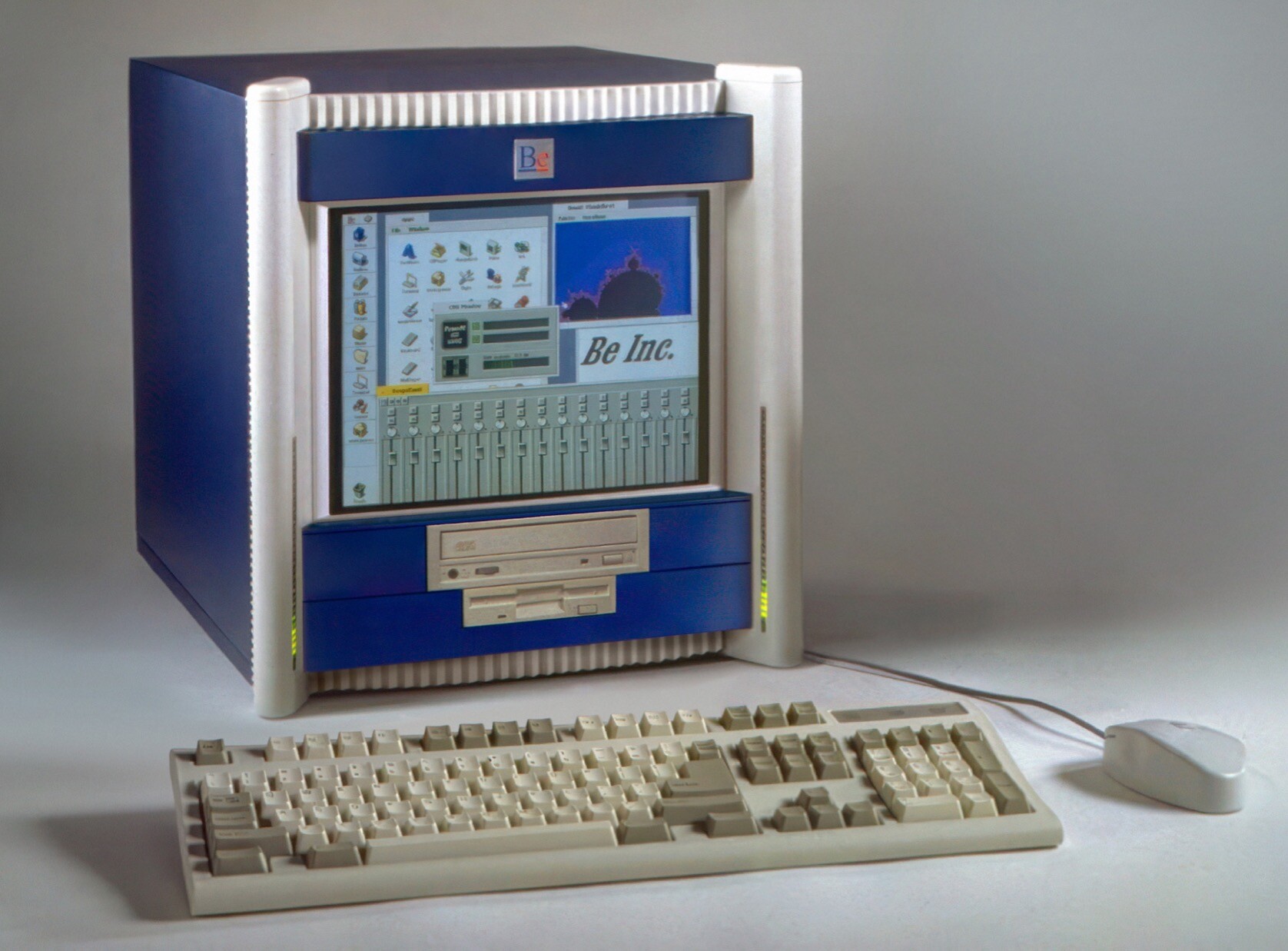

I was on my eighth Google search for what the diagnostic LEDs on a Mac Pro 4,1 CPU board mean, getting back links to bad forum answers and videos (some of them AI-generated themselves), when I realised…

Search engines today also only give shitty approximations of what answers are like.

What’s your name for your people? Your lot. Your squad, gang or moots, oomfies, degenerates, disasters, goons, goblins, besties, chat or fam? Crew? Weirdos? Menaces? All you buncha units, lovelies, darlings or the hive mind? Coven, flock or brood? Inner sanctum or The Old Ones? Swarm, cult or situation?