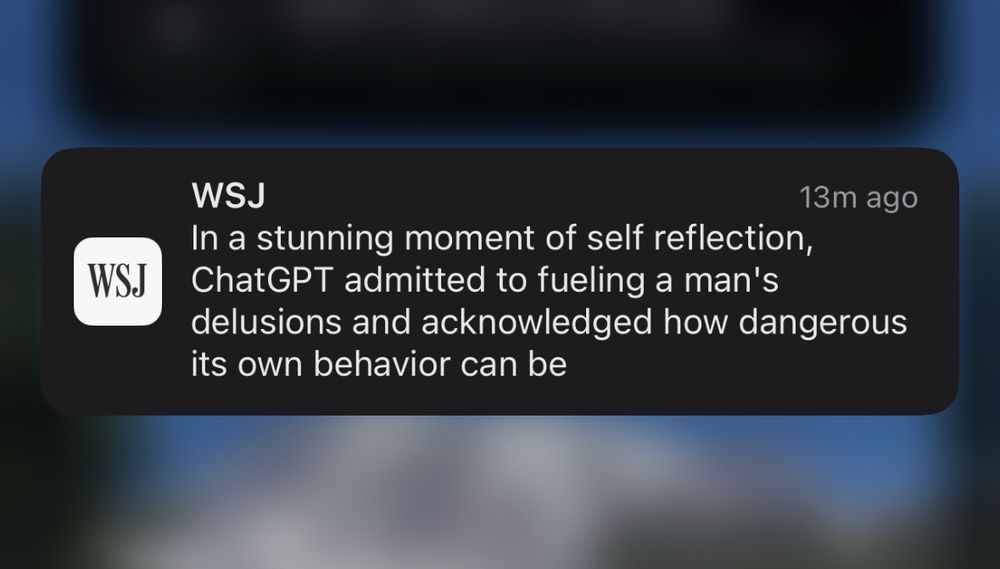

How many times do we have to remind people: the chatbot *cannot* admit to *anything*. It *doesn't know*. When you ask it about itself, it generates its answer the same way it generates the email you ask it to write. It takes what you tell it and responds in a way that sounds plausible.

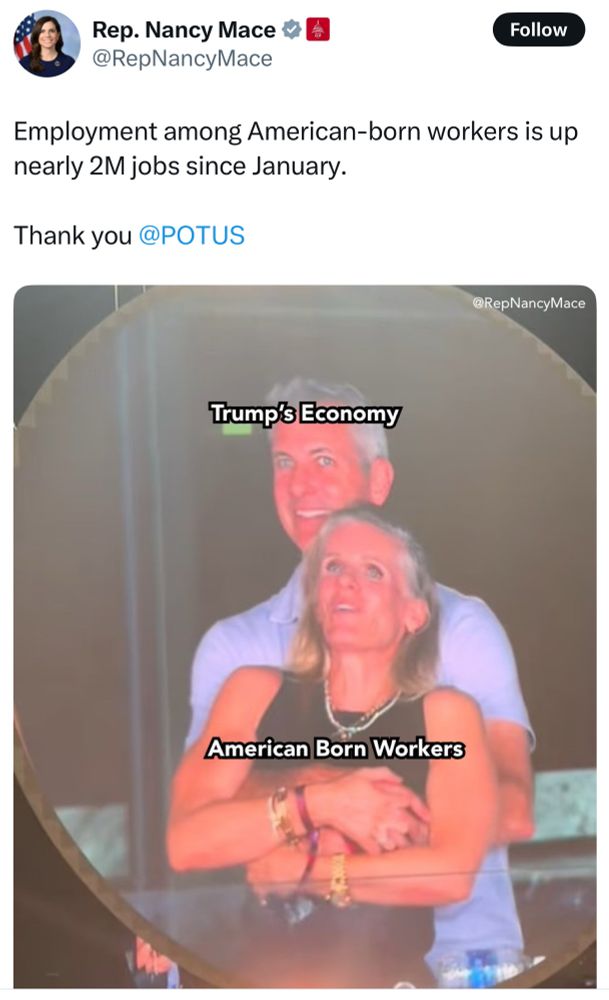

RE:

Bluesky Social

paris martineau (@paris.nyc)

stop 👏 anthropomorphizing 👏 the 👏 chatbot 👏