Has anyone compiled the privacy policies of various LLM platforms, ideally in a comprehensive way? OpenAI said today that private health data and conversations shared with ChatGPT Health won't be used for training purposes. Does this mean OpenAI won't sell it either, or give it to law enforcement when presented with a warrant? What about other AI chat services?

I'm looking for responses from experienced privacy professionals or advocates with empirical data. Please, no responses airing cynicism or grievances about AI privacy in general, no matter how valid.

https://openai.com/index/introducing-chatgpt-health/

Dan Goodin

npub1z3lw...qvzu

Reporter covering security at Ars Technica. DM me on Signal: DanArs.82.

Site: https://arstechnica.com/author/dan-goodin/

The International Association for Cryptologic Research has cancelled the results of its annual leadership election after an official lost an encryption key needed to unlock results stored in a "hyper-secure election system."

Cryptographers Held an Election. They Can’t Decrypt the Results.

A global group of researchers was unable to read the vote tally, after an official lost one of three secret code keys needed to unlock a hyper-secu...
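Why losing just one of three keys can sink the whole tally is easy to see with a toy model. The sketch below assumes plain 3-of-3 XOR secret sharing; the IACR's actual election system may use a different scheme, and the names `split_key`, `combine`, and `KEY_LEN` are hypothetical. Every share is required, and any two shares together are just random bytes that reveal nothing about the key.

```python
import secrets

KEY_LEN = 32  # bytes; hypothetical key size chosen for illustration


def split_key(key: bytes, n: int = 3) -> list[bytes]:
    """Split key into n shares so that ALL n are needed (n-of-n XOR sharing)."""
    # The first n-1 shares are uniformly random bytes.
    shares = [secrets.token_bytes(len(key)) for _ in range(n - 1)]
    # The last share is the key XORed with every random share.
    last = key
    for s in shares:
        last = bytes(a ^ b for a, b in zip(last, s))
    shares.append(last)
    return shares


def combine(shares: list[bytes]) -> bytes:
    """XOR all shares together; only the full set yields the original key."""
    out = bytes(len(shares[0]))
    for s in shares:
        out = bytes(a ^ b for a, b in zip(out, s))
    return out


key = secrets.token_bytes(KEY_LEN)
shares = split_key(key)

recovered = combine(shares)      # all three trustees present: key recovered
partial = combine(shares[:2])    # one share lost: result is random, unrelated to the key
```

An n-of-n design like this protects strongly against a single rogue trustee, but it has no redundancy. A k-of-n threshold scheme (for example, Shamir secret sharing) trades some of that protection for tolerance of a lost key.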

Thoughts about this Microsoft advisory?

"Additionally, agentic AI applications introduce novel security risks, such as cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation."

Experimental Agentic Features - Microsoft Support

I could really benefit from experts' analysis of this WSJ article reporting that China-backed hackers used Anthropic’s Claude to automate 80% to 90% of a September hacking campaign targeting corporations and governments.

There aren't a lot of specifics, but among those provided:

> The effort focused on dozens of targets and involved a level of automation that Anthropic’s cybersecurity investigators had not previously seen.

> In this instance 80% to 90% of the attack was automated, with humans only intervening in a handful of decision points.

> The hackers conducted their attacks “literally with the click of a button, and then with minimal human interaction."

> Anthropic disrupted the campaign and blocked the hackers’ accounts, but not before as many as four intrusions were successful.

> In one case, the hackers directed Claude tools to query internal databases and extract data independently.

> “The human was only involved in a few critical chokepoints, saying, ‘Yes, continue,’ ‘Don’t continue,’ ‘Thank you for this information,’ ‘Oh, that doesn’t look right, Claude, are you sure?’”

> Stitching together hacking tasks into nearly autonomous attacks is a new step in a growing trend of automation that is giving hackers additional scale and speed.

We've seen so many exaggerated accounts of AI-assisted hacks. Is this another one? Are there reasons to take this report more seriously? Any other thoughts?

https://www.wsj.com/tech/ai/china-hackers-ai-cyberattacks-anthropic-41d7ce76

Wow, the lack of ANY factual support for such a claim amounts to hyperbole. And from a CEO peddling a service to "guarantee content integrity." File this one under "Umbrella salesman predicts torrential rain."

https://www.wgcu.org/science-tech/2025-09-23/detection-expert-says-hackers-likely-used-ai-to-penetrate-airport-system

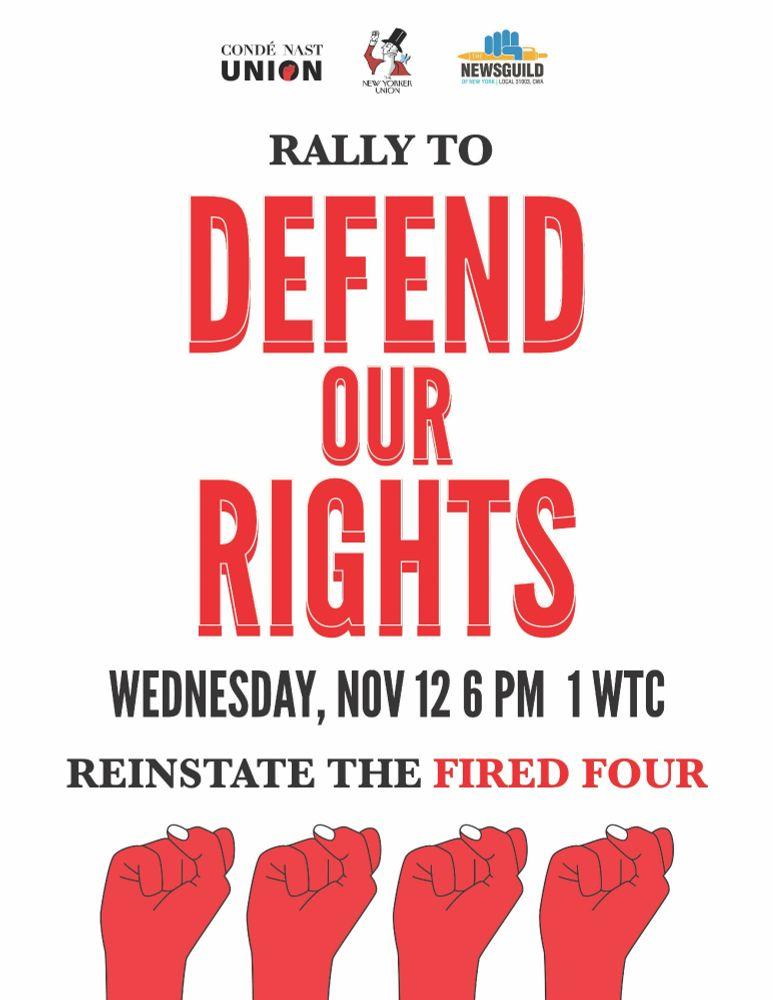

ICYMI: 4 Condé Nast employees were illegally fired for exercising permitted speech in their workplace. Tonight, NY AG Letitia James will call out this union-busting move by Condé management. Please attend. Please also sign our petition to reinstate our fired colleagues.

Please boost for reach.

Tell Condé bosses to reinstate the Fired Four, reverse the suspensions and end the union-busting

On Nov. 5, 2025 in an egregious attempt at union-busting, Condé Nast management illegally terminated four union members – Alma Avalle, Jake Lahu...

"The former executive of Trenchant who pleaded guilty this week to selling his company's software hacking tools to a zero-day broker in Russia, sold at least one of these tools to the Russian firm even after learning that a previous tool he sold the broker was being used by a South Korean broker – indicating that the stolen tools were being passed on to others downstream."

A reminder that if you're not following @npub1gxm0...hhnu you should be.

ZERO DAY

Former Trenchant Exec Sold Stolen Code to Russian Buyer Even After Learning that Other Code He Sold Was Being "Utilized" by Different Broker in South Korea

The former executive of Trenchant who pleaded guilty this week to selling his company's software hacking tools to a zero-day broker in Russia, sold...

People working on post-quantum-proofing vulnerable encryption protocols (and curious onlookers) can find lots of value in this new post from Cloudflare. It discusses the herculean engineering challenges of revamping anonymous credentials (ACs) that will be broken by a quantum computer. There's a growing need for this kind of privacy, for instance to make digital driver's licenses privacy-preserving. ACs allow individuals to prove specific facts, such as having held a driver's license for more than three years, without divulging personal information like their birthday or place of birth. The long and short of the challenge is that engineers can't simply drop quantum-resistant algorithms into AC protocols that currently use vulnerable ones. Instead, they will need to work with standards bodies to build entirely new protocols, largely from scratch. The post goes on to name a few of the most promising approaches.

https://blog.cloudflare.com/pq-anonymous-credentials/

AMD, Intel, and Nvidia have poured untold resources into building on-chip trusted execution environments (TEEs). These enclaves use encryption to protect data and execution from being viewed or modified. The companies proudly declare that their TEEs will protect data and code even when the OS kernel has been thoroughly compromised. The chipmakers are considerably less vocal about an exclusion: physical attacks, which are becoming increasingly cheap and easy, aren't covered by the threat model. These physical attacks use off-the-shelf equipment and only intermediate admin skills to completely break all TEEs made by these three chipmakers.

This shifting security landscape leaves me asking a bunch of questions. What's the true value of a TEE going forward? Can governments ever obtain court orders compelling a hosting provider to run this attack on its own infrastructure? Why do the companies market their TEEs so heavily for edge servers when one of the top edge-server threats is physical attacks?

People say, "Well, yes. Just run the server in Amazon or another top-tier cloud provider and you'll be reasonably safe." The thing is, TEEs can only guarantee to a relying party that the server on the other end isn't infected and couldn't give up data even if it were. There's no way for the relying party to know whether the service is in Amazon or in an attacker's basement. So aren't we once again back to simply trusting the cloud, which is precisely the problem TEEs were supposed to solve?

Ars Technica

New physical attacks are quickly diluting secure enclave defenses from Nvidia, AMD, and Intel

On-chip TEEs withstand rooted OSes but fall instantly to cheap physical attacks.